Jensen Huang, founder of the world’s biggest AI chipmaker, has an unofficial motto that dates back to his early years in video games: “Our company is 30 days from going out of business”.

So what? The motto has never been less true. This week’s quarterly numbers from Nvidia were so startling, even by tech standards, that they added the equivalent of a whole Netflix to the company’s market value when Wall Street opened yesterday.

They also answered – for now – the question of whether the AI boom is real or a bubble. It’s real.

- For the last three months of last year, Nvidia earned an average of $263 million a day.

- That came to $22 billion in all: three times what Exxon earned, and three times what Nvidia itself earned in the same period of 2022.

- Huang had to admit that, far from Nvidia going out of business, “a whole new industry” was being formed on the back of its chips, which governments and the rest of Silicon Valley are queueing up to buy at $40,000 apiece.

- He expects his firm to earn another $24 billion in the three months to April.

Chips with everything. Nvidia’s chips are used to train the large language models (LLMs) needed to compete in the global rush to optimise and automate every process, chore and challenge that could benefit from a computer thinking somewhat like a human, from paralegalling to protein modelling.

- Nvidia dominates the field, with 87 per cent of the market for AI chips and 70 per cent margins.

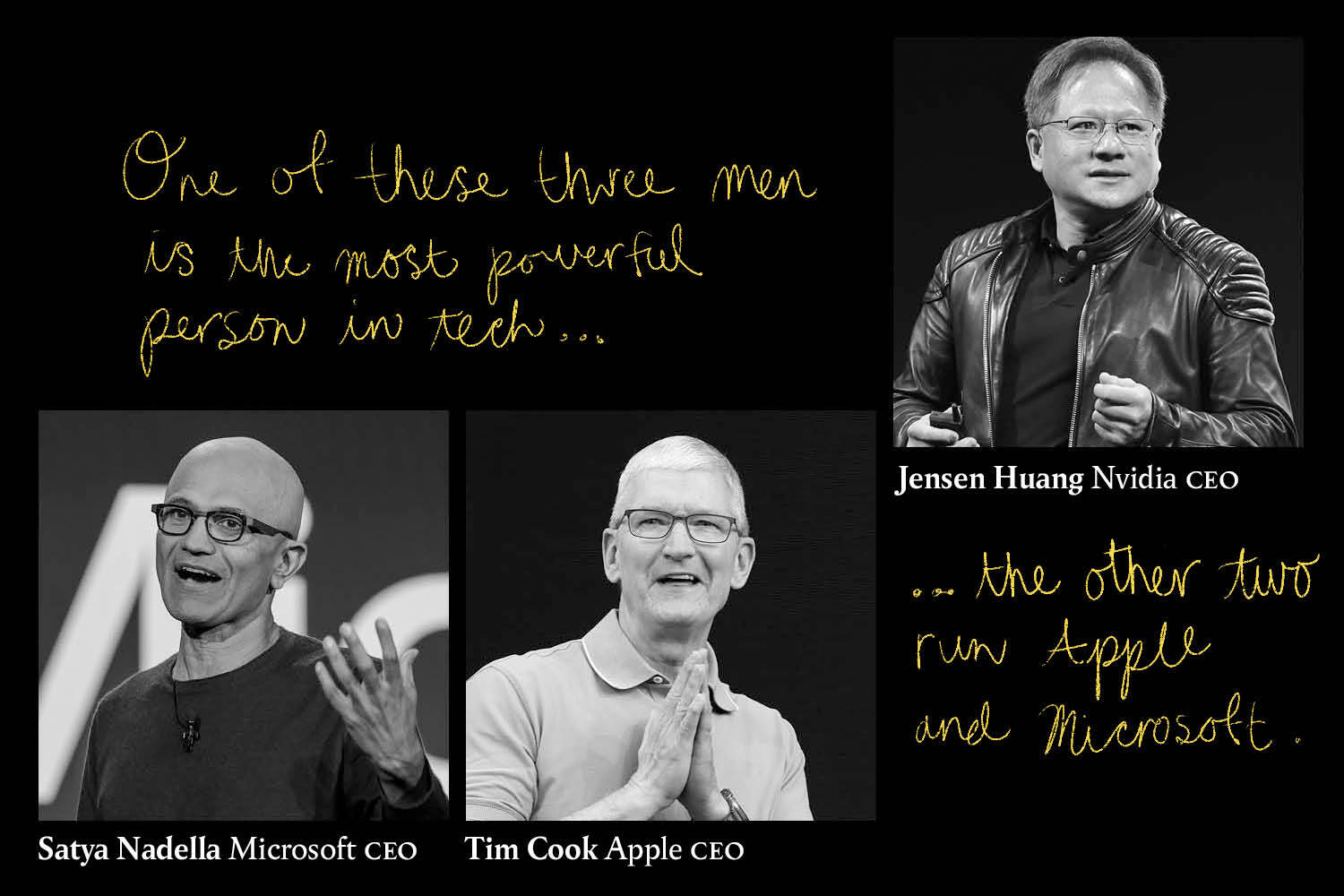

- Nvidia’s $1.67 trillion market cap amounts to $76 million for each of its 22,000 staff and makes it the world’s third-biggest company, after Microsoft and Apple.

If the race to adopt AI were an arms race, there’d be only one arms dealer. One of Nvidia’s lesser competitors accused it last year of extorting its customers. Huang responded: “The more you buy, the more you save.”

300 million – full-time jobs worldwide that could be exposed to automation by AI, according to Goldman Sachs last year

$700 billion – value added to Nvidia’s market value so far this year, accounting for a quarter of all gains in the S&P 500

$4 billion – Nvidia’s earnings for the whole of 2014

Wednesday’s results beat expectations and sent a series of messages to markets and the AI world, which increasingly resembles the actual world.

On hype: it’s still early days in the AI hype cycle. Nvidia’s shares are up 765 per cent year on year, which would put them in bubble territory if AI chips were like tulips or 1990s dot-com networking equipment. But customers are buying the chips to boost productivity rather than to speculate, and markets are rewarding Nvidia at least partly on that basis. NYU’s Scott Galloway says pre-orders of Nvidia’s Enterprise GPUs (graphics processing units) have become a proxy measure of AI investment.

On hope: new recruits worry they may be joining the company as its stock price peaks, and some analysts think most of its future growth is now priced in. Others see AI chip demand outstripping supply for years to come, making Nvidia still “cheap”.

On hardware: Nvidia sells the brute processing power needed to put LLMs and much else in the cloud. Tim Gordon of Best Practice AI says: “Cloud compute is where the money in AI is going – lots and lots of compute and you can blow the doors off. Nvidia owns the gunpowder factory.”

Who’s buying? Microsoft is Nvidia’s biggest corporate customer, but Saudi Arabia and the UAE are using sovereign funds to buy all the H100s, Nvidia’s flagship AI chips, that they can get. Inflection AI raised $1.3 billion last year to spend mainly on H100 clusters. China is barred from buying both H100s and the next-generation H200s due on the market in the spring.

Who’s selling? Google, Meta, Apple and Intel all envy Nvidia and are expanding their own AI chip-making capabilities. But they’re all Nvidia customers, and Nvidia has a ten-year head start.

Who cares?

- The UK, which this week joined the list of countries keen to scrutinise the company’s role in promoting or squashing competition.

- France, where officials raided company offices last year over concerns about anti-competitive behaviour.

- Climate activists, who say LLMs’ colossal electricity use is unsustainable.

The bitter lesson, as Rich Sutton wrote in his famous 2019 essay of that name, is that building human knowledge into AI systems helps less than simply throwing more computation at them: “Building in how we think we think doesn’t work in the long run.” It’s the compute, stupid.